Discord parental controls are a frequent topic among parents whose kids love gaming and online chats. But does Discord have parental controls like other social platforms?

Not exactly – while there isn’t a built-in parental control feature, there are still important privacy and safety settings that can help protect your child. In this guide, we’ll explain how to make Discord safe for your child, what risks to be aware of, and how tools like Canopy can help you filter harmful content.

What is Discord?

App Store and Google Play Description: “Discord is your place to talk. Create a home for your communities and friends, where you can stay close and have fun over text, voice, and video. Whether you’re part of a school club, a gaming group, a worldwide art community, or just a handful of friends that want to spend time, Discord makes it easy to talk every day and hang out more often.”

Originally aimed at gamers, it was a communication tool that could be used to talk about specific games and interests, and could be integrated with Twitch, Spotify, and Xbox Live. Groups are formed based on topic or social connection, and from there, the messaging — text, voice, image, video, and screen sharing — begins. Some consider it to be an advanced version of “Skype for gamers,” and those who play games like Fortnite are familiar with it.

Discord is free to start using, but you can upgrade for extended features and perks with a monthly or annual fee.

What’s the Age Rating for Discord?

Discord is rated 12+ on the App Store and “Teen” (13+) on Google Play. The platform itself states that users must be at least 13 years old, but, as with most social apps, there’s no reliable age verification. That raises a common concern for parents, who often ask: is Discord safe for kids?

While many teens use Discord to chat about games, art, or music, its open-server structure means they can easily encounter explicit content or strangers. The absence of built-in parental controls puts Discord alongside other platforms where parents already manage risks themselves, for instance by blocking videos on YouTube to prevent exposure to inappropriate media.

Like with TikTok and Instagram, it’s essential to understand each app’s privacy tools and combine them with external safeguards. Guides such as Instagram parental controls, TikTok parental controls, and how to block YouTube on iPhone explain how small setting changes can dramatically improve online safety.

If your child is exploring social apps independently, reviewing these protections early helps build digital responsibility — just as families might discuss what to do if your child sends inappropriate pictures or how to talk to your child about inappropriate pictures. In the same way, open conversations about Discord’s communities and boundaries will help ensure your child uses it wisely.

Are There Any Discord Parental Controls or Safety Settings?

There are currently no true parental controls for Discord, but parents can still adjust several in-app privacy and safety settings to reduce exposure to explicit or unsafe content. Unlike structured systems such as iOS parental controls or Windows 10 parental controls, Discord’s options rely heavily on the user’s cooperation — meaning your child can change them at any time.

Start by reviewing the Privacy & Safety tab together. Here, you can turn on automatic scanning of direct messages to filter inappropriate images and block messages from non-friends. Parents familiar with other techniques, like how to block websites on a router or how to block a website on Chrome mobile, will recognize the same principle: prevention at the source, rather than constant monitoring.

You can also adjust who can add your child as a friend and restrict access to adult or public servers. It’s worth pairing these settings with a trusted text monitoring app or parental control app for cell phones to help detect risky conversations or content across devices.

Some families prefer a layered approach — using Discord’s settings alongside broader digital wellness strategies such as mental health activities for kids. Combining these steps provides both technical protection and emotional readiness, helping your child navigate online communities safely and responsibly.

How to Make Discord Safer for Kids (Step-by-Step Guide)

If you’re wondering how to make Discord safe for your child, the process starts with adjusting the right settings and combining them with broader online protection strategies. Unlike structured tools such as HBO Max parental controls or Peacock parental controls, Discord’s filters depend on individual choices — meaning parents need to walk through each step with their child.

1. Enable Message Scanning

Turn on the “Keep Me Safe” option so Discord automatically scans direct messages for explicit media. Parents who have learned how to block videos on Facebook or how to block content on TikTok will find this similar: the goal is to stop inappropriate material before it reaches the screen.

2. Limit Direct Messages and Friend Requests

Restrict direct messages from non-friends and tighten friend permissions so only people with your child’s unique Discord Tag can connect. Think of it as using Snapchat parental controls — it’s about controlling who can initiate contact.

3. Join Private Servers Only

Encourage participation in invite-only servers created by friends or classmates. If your child also uses streaming platforms, this is similar to how Netflix parental control lets you restrict age-inappropriate profiles — safe spaces begin with trusted access.

4. Review Servers and Activity Regularly

Every few weeks, check which servers your child has joined and talk through new contacts or content.

5. Add a Layer of Device-Level Protection

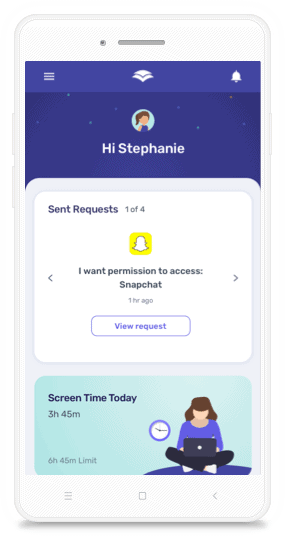

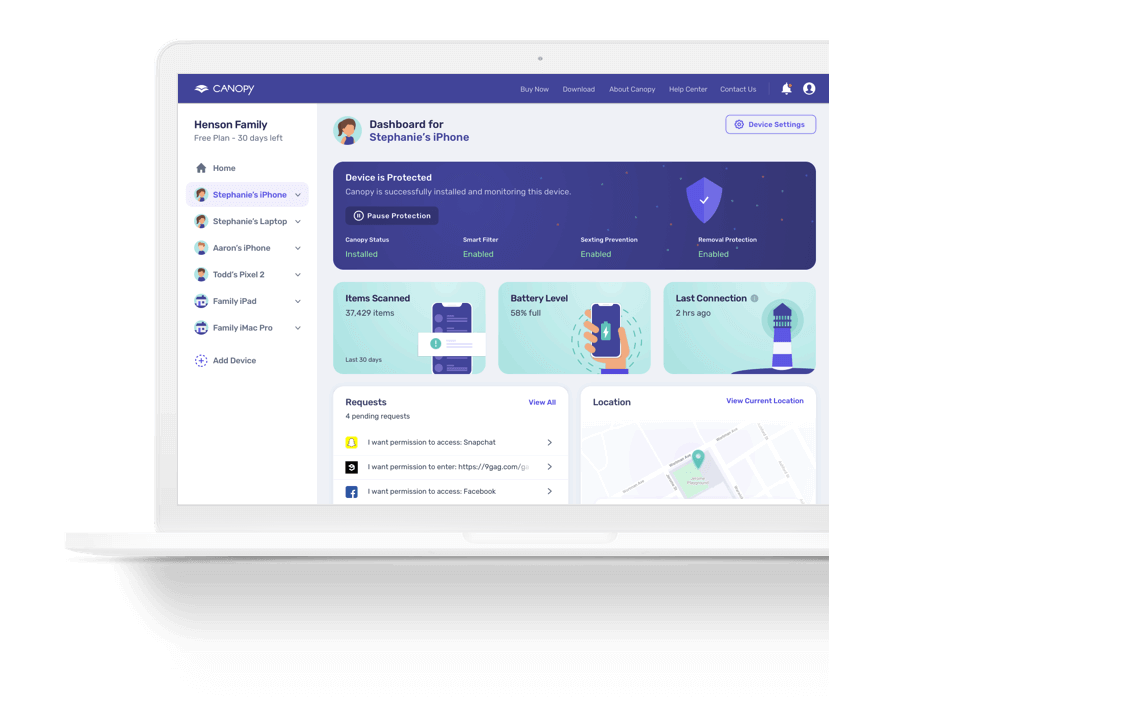

Since Discord doesn’t have built-in parental controls, many parents use an external safeguard such as Canopy — known for its positive reviews and ability to block explicit media in real time.

Beyond privacy settings, maintaining digital balance matters too. Families exploring ways to detox a child from screen time, or researching the best screen time control app, can pair those habits with regular discussions about empathy, boundaries, and online kindness. That combination of tools and trust helps your child develop long-term digital responsibility.

How Parents Can Review Discord Data and Activity

If you’re concerned about your child’s interactions on Discord, you can request what the platform calls a data package — a downloadable file that contains your child’s account information, message history, and server participation.

To access this data package, open User Settings → Privacy & Safety → Request all of my Data. Once requested, Discord can take up to 30 days to send a download link to the account’s registered email. Reviewing it together can be a healthy way to talk about online choices, much like using internet safety tips for parents as conversation starters around responsible digital habits.
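For parents comfortable running a short script, the sketch below shows one way to skim an unzipped data package for phrases worth talking about. It rests on assumptions that are not confirmed by this guide: that the package contains a `messages` folder with one subfolder per conversation, each holding a `messages.json` file, and that each entry stores its text under a `Contents` field. The function names and the keyword list are hypothetical, and the layout of the export may differ or change, so check the files in your own download first.

```python
import json
from pathlib import Path


def load_messages(package_dir):
    """Yield (conversation_folder, text) pairs from an unzipped data package.

    Assumed layout (verify against your own download):
        <package_dir>/messages/<conversation folder>/messages.json
    where each file is a JSON list of objects with a "Contents" field.
    """
    for path in sorted(Path(package_dir).glob("messages/*/messages.json")):
        with path.open(encoding="utf-8") as handle:
            entries = json.load(handle)
        for entry in entries:
            # Treat missing or empty message text as an empty string.
            text = entry.get("Contents") or ""
            yield path.parent.name, text


def flag_messages(package_dir, keywords):
    """Return (conversation_folder, text) pairs whose text contains a keyword.

    Matching is a case-insensitive substring check, so expect false
    positives; the results are a starting point for a conversation only.
    """
    lowered = [keyword.lower() for keyword in keywords]
    hits = []
    for conversation, text in load_messages(package_dir):
        if any(keyword in text.lower() for keyword in lowered):
            hits.append((conversation, text))
    return hits


if __name__ == "__main__":
    # Hypothetical folder name and keyword list; replace with your own.
    for conversation, text in flag_messages("discord_package", ["meet up", "send a pic"]):
        print(conversation, "|", text)
```

A simple keyword match like this will miss context and slang, so it works best as a way to find conversations to read and discuss together, not as a replacement for them.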

While Discord’s report doesn’t offer ongoing monitoring, parents can pair it with protective features such as Canopy’s real-time filtering system, which prevents explicit content from appearing even before it’s opened.

If that report reveals concerning messages or risky patterns, it’s important to have open discussions instead of reacting punitively. You might use approaches similar to how to support a child with mental health issues — helping them process stress or peer pressure.

By combining Discord’s transparency tools with external safeguards and empathy-driven communication, you give your child a safer space to connect and learn — both online and off.

Discord’s Community Guidelines: What Parents Should Know

Discord prohibits harassment, hate speech, threats of violence, evading user blocks and bans, and distributing malware or viruses. It does not allow “adult content” outside age-gated channels, content that sexualizes minors, or sexually explicit content shared without consent.

They have rules prohibiting content that does any of the following: glorifies or promotes suicide or self-harm, includes images of sadistic gore or animal cruelty, promotes violent extremism, or facilitates the sale of prohibited or potentially dangerous goods. There are also rules around pirated software and stolen accounts, and users may not promote, encourage, or engage in any illegal behavior.

Understanding Discord Terms: Servers, Channels, and DMs Explained

Like other apps, such as TikTok, Discord has its own vocabulary. You can find more information on the terms below, and on others, in the Discord Dictionary. These are the ones that are particularly helpful for parents to understand.

- Servers are “spaces” on the platform. They function as groups that center around a particular topic and are created by a community or friend group. Servers can be invite-only and private or public and open to anyone. All users can create servers.

- Channels are created within a server. Think of them as subtopics or subgroups. At times, they are defined based on how people will communicate about the topic, such as a text channel where users can post messages, upload files, and share images, or a voice channel where users can connect with voice or video calls and share their screens through the Go Live feature.

- Direct Messages (DMs) are private messages (one-on-one).

- Group Direct Messages (GDMs) are group messages with up to nine people and require an invite to join.

- Go Live is the feature for sharing a screen with the other people in a server, DM, or GDM.

Dangers and Risks of Using Discord Without Parental Controls

Despite the safety precautions that Discord has taken recently, there are concerns for kids on the popular app. ABC News reported on how someone who was arrested for sexually exploiting two teens met one of them on the app. Deputies interviewed for the story called Discord “the perfect spot for predators to meet kids.”

An article in The Wall Street Journal talked about how prevalent bullying can be, especially among gamers: “And that’s where racial slurs, sexist comments, politically incorrect memes and game-shaming are prevalent, users say.” The reporter goes on to share that it took a mere 15 minutes of participating on the app to “find porn and Nazi memes, without specifically searching for them.”

Similar to the rest of the Internet, there is inappropriate and explicit content on Discord. What may be different is that kids are in groups where this content is sent to them in messages or groups. They don’t have to go out and search for it, and there is no Discord parental control to prevent it.

One young adult Discord user talked about how his peers, when he was a teenager, used this and other apps like it to hide things from their parents. They would create groups like “Biology Lab” and then talk about anything but school-related topics, typically ones that would get them in trouble if the conversations were discovered.

In his experience, parents tend to check regular text messages and online search history, and internet filters and parental control apps are more likely to cover those as well. Some filters don’t work with apps like Discord, and the messages can be easily hidden on a smartphone, so parents weren’t aware of the inappropriate and dangerous content being shared there and in other places like it.

Dangers and Risks for Kids on Discord

Even though Discord has taken steps toward safer communication, the lack of built-in parental controls for Discord means that kids can still encounter inappropriate or risky content. Public servers and group chats often mix strangers with friends, creating situations similar to what parents see when researching how to block YouTube to keep kids from stumbling upon explicit material.

The most common issues reported on Discord include exposure to adult content, harassment, and unsolicited messages from strangers.

Predators can exploit anonymity on the platform, reaching out through private servers or direct messages. That’s why combining communication skills with technology is crucial — understanding why parents should limit screen time and teaching kids to recognize warning signs helps build long-term awareness.

Cyberbullying can also thrive in group chats and gaming communities. Parents who already explore how to prevent cyberbullying know that emotional well-being online is as important as content filtering.

Finally, kids may hide chats or join misleadingly named groups to avoid detection, just as some do on other social platforms. Discussing trust and responsibility early helps ensure your child feels safe bringing problems to you before they escalate.

The Positive Side of Discord Communities

The same Wall Street Journal article referenced above also described the good that can come from an online community. One group that was formed on Discord to get away from bullying in other groups “became like family… Several of them recently sent diapers to a dad who had told the group he was having a hard time providing for his family.”

Is Discord Safe for Kids? How to Decide for Your Family

You know your child better than anyone. You know what it’s like to talk through various app settings and parental controls with them. And you know whether they are ready to use Discord without built-in parental controls. As with all other messaging apps and social media platforms, it’s not only about the content that your child can access but also their maturity and ability to navigate it wisely.

You can prepare your child for how you hope they would respond if they are exposed to pornography and talk about parental control apps that your family uses. And, together, you can discuss the realities of Discord, how to be a responsible digital citizen, and if your child is ready to participate in the platform.

How Canopy Works with Discord (and What It Can Block)

Canopy can filter out pornographic content (images and videos, not live-streaming) on the web browser version of Discord. If your child is using Discord, you can remove permission to use the app in Canopy’s App Management tool, so that Discord can only be accessed from a web browser. If you would prefer that your child not use Discord at all, you can both remove permission to use the app and block the URL from the Website Management tool. Canopy helps you protect those you love most.

FAQs

Does Discord have parental controls?

No, Discord doesn’t have true parental controls. However, parents can adjust privacy settings and use tools like Canopy to block explicit content.

Can you put parental controls on Discord?

You can’t directly restrict Discord from within the app, but you can manage privacy options, limit direct messages, and block the app with parental control software.

Is Discord safe for kids?

Discord can be safe when used responsibly, but exposure to inappropriate content and strangers is possible. Parents should monitor use and adjust safety settings.

How can I make Discord safe for my child?

Enable message scanning, restrict friend requests, review joined servers, and use Canopy to block explicit media.

What age is Discord recommended for?

Discord’s minimum age is 13, but many experts recommend parental guidance for teens under 16 due to the platform’s open nature.